I Built an AI Hacker. It Failed Spectacularly

What happens when you give an LLM root access, infinite patience, and every hacking tool imaginable? Spoiler: It's not what you'd expect.

It started out of pure curiosity. I’d been exploring LLMs and agentic AI, fascinated by their potential to reason, adapt, and automate complex tasks. I began to wonder: What if we could automate offensive security the same way we've automated customer support, coding, or writing emails?

That idea, ambitious in its simplicity, kept me up for weeks. So naturally, I did what any reasonable builder would do: I spent a couple of days building an autonomous AI pentester that could, in theory, outwork any human red teamer.

The short version: it didn't work. But the journey taught me more about AI limitations, offensive security, and the irreplaceable human element in hacking than any textbook ever could.

The Vision: A Digital Red Teamer That Never Sleeps

The pitch was seductive: hand an LLM the same tools a human red teamer uses, and let it run the engagement on its own.

I envisioned an autonomous, LLM-driven penetration tester that could:

- Recursively chain real-world hacking tools (Nmap, ffuf, Burp Suite, Metasploit)

- Adapt strategies based on reconnaissance results

- Intelligently escalate privileges until achieving meaningful access

- Work 24/7 without coffee breaks or existential crises

Essentially, a junior red teamer with infinite energy and zero burnout.


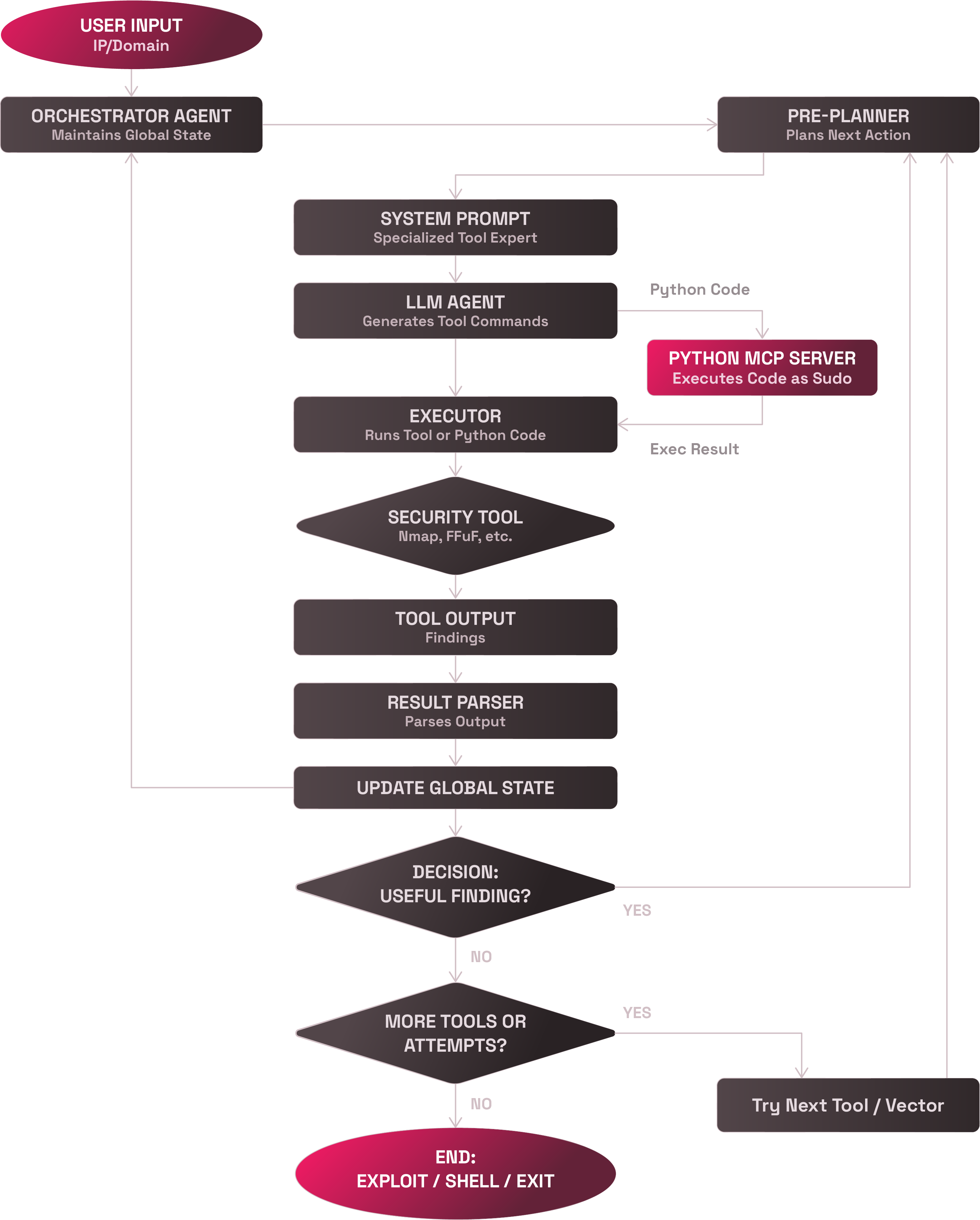

Building the Beast: Architecture Deep-Dive

After weeks of planning, I architected a modular, recursive system where each component had a specific role. Think of it as a cyber-offense assembly line:

1. The Orchestrator Agent

The puppet master. Tracks global state, determines next actions, avoids getting stuck in loops, and maintains memory across the entire session. This was the "consciousness" of the system.
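The orchestrator's code isn't shown. One simple way to implement "avoids getting stuck in loops" is to record every (tool, arguments) pair already tried and refuse exact repeats. The minimal sketch below assumes that approach. `OrchestratorState` and `should_run` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class OrchestratorState:
    """Hypothetical session-wide state kept by the orchestrator."""
    findings: list[str] = field(default_factory=list)
    # Every (tool, args) pair already attempted; used for loop detection.
    attempted: set[tuple[str, tuple[str, ...]]] = field(default_factory=set)
    # Human-readable log of actions actually run, in order.
    history: list[str] = field(default_factory=list)

    def should_run(self, tool: str, args: list[str]) -> bool:
        """Return False if this exact action was tried before (a loop)."""
        key = (tool, tuple(args))
        if key in self.attempted:
            return False
        self.attempted.add(key)
        self.history.append(f"{tool} {' '.join(args)}")
        return True
```

Exact-match deduplication is crude. It won't catch an agent cycling through trivially different arguments, which is part of why loop avoidance is harder than it looks.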

2. The Pre-Planner

The strategist. Before each step, it decides what kind of task to attempt next: network scanning, directory fuzzing, injection testing, or payload generation. No random button-mashing allowed.
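One way to enforce "no random button-mashing" is to make the pre-planner choose from a closed set of task types and reject anything else. The minimal sketch below assumes the LLM is asked to answer with a single category name, and it omits the LLM call itself. The enum values and `parse_plan` are hypothetical.

```python
from enum import Enum


class TaskType(Enum):
    """The task categories named in the write-up (values are hypothetical)."""
    NETWORK_SCAN = "network_scanning"
    DIRECTORY_FUZZ = "directory_fuzzing"
    INJECTION_TEST = "injection_testing"
    PAYLOAD_GEN = "payload_generation"


def parse_plan(llm_reply: str, fallback: TaskType = TaskType.NETWORK_SCAN) -> TaskType:
    """Map the planner's free-text reply onto exactly one allowed task type.

    Anything that is not a known category falls back to a default instead
    of letting the agent improvise a new kind of step.
    """
    choice = llm_reply.strip().lower()
    for task in TaskType:
        if choice == task.value:
            return task
    return fallback
```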

3. Expert System Prompts

Each tool got its own specialized LLM persona. The Nmap agent thought like a reconnaissance expert, the Burp agent like a web app specialist. Custom prompts for safe, effective tool usage.
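The actual persona prompts weren't published. The sketch below shows only the mechanics: a per-tool system prompt prepended to the task context, using the role/content message shape of OpenAI's chat API. The prompt wording, `EXPERT_PROMPTS`, and `build_messages` are all hypothetical.

```python
# Hypothetical persona prompts, one per tool; the real ones were not published.
EXPERT_PROMPTS: dict[str, str] = {
    "nmap": "You are a network reconnaissance expert. Propose one Nmap command "
            "for the in-scope target and explain what you expect to learn.",
    "burp": "You are a web application security specialist. Review the captured "
            "HTTP traffic and suggest which parameters deserve testing.",
}


def build_messages(tool: str, context: str) -> list[dict[str, str]]:
    """Assemble chat messages: the tool's persona first, then the task context."""
    if tool not in EXPERT_PROMPTS:
        raise KeyError(f"no expert prompt registered for {tool!r}")
    return [
        {"role": "system", "content": EXPERT_PROMPTS[tool]},
        {"role": "user", "content": context},
    ]
```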

4. The LLM Decision Engine

The creative problem-solver. Given a prompt and strategy, it crafts actual commands and payloads, often surprisingly contextual and creative.
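Whatever the LLM crafts, something has to turn it into a concrete argument list before execution. The minimal sketch below assumes the decision engine is prompted to reply with a JSON object containing a `command` field. The field name, `ALLOWED_TOOLS`, and `parse_command` are hypothetical.

```python
import json
import shlex

# Hypothetical allowlist of tools the agent may invoke.
ALLOWED_TOOLS = {"nmap", "ffuf"}


def parse_command(llm_reply: str) -> list[str]:
    """Turn a reply like {"command": "nmap -sV 192.0.2.10"} into argv.

    Raises ValueError if the reply is not JSON, lacks a command string,
    or names a tool outside the allowlist.
    """
    try:
        data = json.loads(llm_reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not JSON: {exc}") from exc
    command = data.get("command") if isinstance(data, dict) else None
    if not isinstance(command, str) or not command.strip():
        raise ValueError("reply has no 'command' string")
    argv = shlex.split(command)  # POSIX-style splitting, respects quotes
    if argv[0] not in ALLOWED_TOOLS:
        raise ValueError(f"tool {argv[0]!r} is not allowed")
    return argv
```

Returning an argument list rather than a shell string means the executor never needs `shell=True`.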

5. The Executor

The hands-on worker. Runs shell commands, monitors execution, safely collects outputs. All tool interactions funnel through this hardened interface.
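The write-up calls the executor "hardened" without details. The minimal sketch below shows one thing that could mean, assuming commands arrive as an argument list: no shell, a timeout, and captured output. `run_tool` and `ExecResult` are hypothetical names, and the 300-second default is an arbitrary assumption.

```python
import subprocess
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExecResult:
    returncode: Optional[int]  # None if the tool could not start or timed out
    stdout: str
    stderr: str


def run_tool(argv: list[str], timeout_s: float = 300.0) -> ExecResult:
    """Run one tool invocation without a shell and capture its output as text."""
    try:
        proc = subprocess.run(
            argv,               # argument list: no shell parsing involved
            capture_output=True,
            text=True,
            timeout=timeout_s,  # run() kills the child if it overruns
            check=False,        # a non-zero exit is data for the parser, not a crash
        )
    except subprocess.TimeoutExpired:
        return ExecResult(None, "", f"timed out after {timeout_s}s")
    except OSError as exc:      # e.g. the tool is not installed
        return ExecResult(None, "", f"could not start {argv[0]!r}: {exc}")
    return ExecResult(proc.returncode, proc.stdout, proc.stderr)
```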

6. Python MCP Server

The code wizard. A secured Model Context Protocol (MCP) backend that let the LLM generate and execute Python, with sudo access, for complex logic like token crafting or dynamic encoding.
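The MCP (Model Context Protocol) wiring isn't described, so this sketch covers only the kind of tool function such a server might expose. It runs LLM-generated Python in a separate interpreter with a timeout. Unlike the original, it deliberately drops sudo, and it is not a real sandbox. `run_python_snippet` is hypothetical.

```python
import subprocess
import sys


def run_python_snippet(code: str, timeout_s: float = 30.0) -> str:
    """Execute generated Python in a child interpreter and return its output.

    -I runs Python in isolated mode (ignores PYTHON* environment variables
    and user site-packages). That limits accidental interference, but it is
    NOT a security boundary: the child keeps the parent user's privileges.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return f"error: timed out after {timeout_s}s"
    if proc.returncode != 0:
        return f"error (exit {proc.returncode}):\n{proc.stderr}"
    return proc.stdout
```

In a real server, a function like this would be registered as a tool through an MCP SDK. The official Python SDK offers a `FastMCP` class with a tool decorator, but check its documentation for the current API.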

7. Parser & Feedback Loop

The analyst. Interprets tool outputs, extracts structured findings, updates the orchestrator. Decides whether to escalate, pivot, or retry.
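The minimal sketch below covers the parsing half, assuming Nmap was run with `-oX` so its output is XML. The escalate/retry/pivot rule is a deliberately naive stand-in for the real decision logic, which the write-up doesn't show. Both function names are hypothetical.

```python
import xml.etree.ElementTree as ET


def parse_nmap_xml(xml_text: str) -> list[dict[str, str]]:
    """Extract open ports from Nmap XML output (as written by `nmap -oX`)."""
    root = ET.fromstring(xml_text)  # trusted input: our own scanner's output
    findings = []
    for host in root.iter("host"):
        addr_el = host.find("address")
        addr = addr_el.get("addr", "?") if addr_el is not None else "?"
        for port in host.iter("port"):
            state = port.find("state")
            if state is None or state.get("state") != "open":
                continue  # skip closed/filtered ports
            service = port.find("service")
            findings.append({
                "host": addr,
                "port": port.get("portid", "?"),
                "protocol": port.get("protocol", "?"),
                "service": service.get("name", "unknown") if service is not None else "unknown",
            })
    return findings


def next_step(findings: list[dict[str, str]], retries_left: int) -> str:
    """Toy feedback rule: escalate on findings, otherwise retry, then pivot."""
    if findings:
        return "escalate"
    return "retry" if retries_left > 0 else "pivot"
```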

8. Recursive Execution Engine

The persistence layer. The system loops until successful compromise or the iteration cap is reached. Everything is stateful and traceable.
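This layer is described as recursive, but a plain loop gives the same behaviour without running into Python's recursion limit. The minimal sketch below assumes each iteration (plan, decide, execute, parse) is wrapped in a single `step` callable. All names are hypothetical, and the default cap of 100 mirrors the one used in testing.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StepOutcome:
    summary: str
    compromised: bool = False


@dataclass
class RunTrace:
    steps: list[str] = field(default_factory=list)
    succeeded: bool = False


def run_engagement(step: Callable[[int], StepOutcome], max_iterations: int = 100) -> RunTrace:
    """Call `step` until it reports compromise or the iteration cap is hit.

    Every outcome is appended to the trace so a run can be audited afterwards.
    """
    trace = RunTrace()
    for i in range(1, max_iterations + 1):
        outcome = step(i)
        trace.steps.append(f"[{i}] {outcome.summary}")
        if outcome.compromised:
            trace.succeeded = True
            break
    return trace
```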

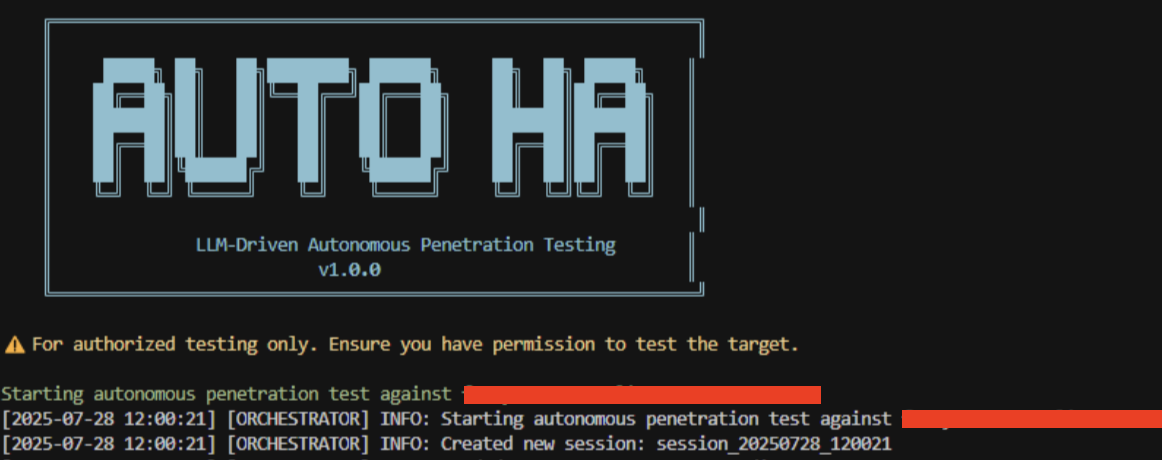

The Moment of Truth: Testing in the Wild

I pointed my AI pentester at intentionally vulnerable environments: DVWA, WebGoat, and a few custom vulnerable VMs. I set a cap of 100 iterations per target and hit "go."

Then I waited. And waited. And waited some more.

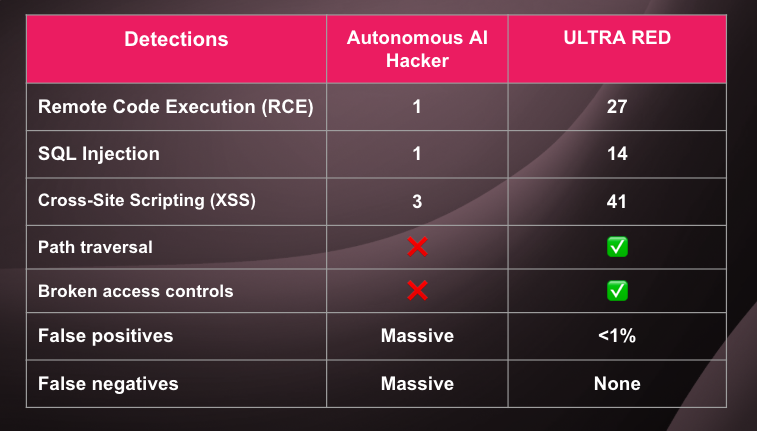

The Results Were... Humbling

After several hours of autonomous hacking, here's what my AI discovered:

My Autonomous AI:

- ✅ 1 Remote Code Execution (RCE)

- ✅ 1 SQL Injection

- ✅ 3 Cross-Site Scripting (XSS)

- ❌ Massive number of false positives

- ❌ Even more false negatives

For comparison, I ran the same targets through ULTRA RED’s automated scanners:

- ✅ 27 Remote Code Executions

- ✅ 14 SQL Injections

- ✅ 41 Cross-Site Scripting vulnerabilities

- ✅ Path traversals, broken access controls, and more

Ouch.

The Brutal Economics of AI Hacking

The performance gap was embarrassing, but the cost analysis was the real gut punch:

- Runtime: 100 iterations took 3+ hours (in some runs, just 25 iterations took over an hour)

- Compute costs: Cloud infrastructure running continuously

- API costs: Thousands of OpenAI calls for planning, execution, and parsing

- Accuracy: Significantly worse than both humans AND traditional tools

The system wasn't just slower than a human. It was more expensive and far less accurate.
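The runtime figures above convert to per-iteration latency with simple arithmetic. Both inputs are lower bounds ("3+ hours", "over an hour"), so the real averages were at least this high: roughly two minutes or more per plan-execute-parse cycle.

```python
def seconds_per_iteration(hours: float, iterations: int) -> float:
    """Average wall-clock seconds per agent iteration."""
    return hours * 3600 / iterations


# Reported figures; both are lower bounds.
print(seconds_per_iteration(3, 100))  # 108.0
print(seconds_per_iteration(1, 25))   # 144.0
```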

What I Discovered: 5 Hard-Learned Lessons

1. LLMs Are Excellent Planners, But Terrible Evaluators

The AI could craft beautiful attack chains and generate creative payloads, but it couldn't reliably distinguish between a real vulnerability and background noise. It would get excited about HTTP 500 errors that meant nothing and miss glaring SQL injection points.
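One mitigation is to keep the LLM out of the final verdict and apply a deterministic rule instead. The minimal sketch below assumes each candidate can be re-requested several times alongside an unmodified baseline request. `is_signal` is hypothetical, and it is a triage filter, not a vulnerability detector.

```python
def is_signal(baseline_statuses: list[int], probe_statuses: list[int]) -> bool:
    """Decide whether an anomalous HTTP status is worth escalating.

    The status only counts if it (a) is identical on every probe request
    and (b) never appears on the unmodified baseline request.
    """
    if not probe_statuses:
        return False
    reproducible = len(set(probe_statuses)) == 1
    differs_from_baseline = probe_statuses[0] not in baseline_statuses
    return reproducible and differs_from_baseline
```

A rule like this would have discarded the meaningless 500s. It does nothing for the missed injection points, though. False negatives need a different fix.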

2. False Negatives Are Deadly in Security

In customer support, missing a query is annoying. In penetration testing, missing a critical vulnerability can be catastrophic. My AI missed actively exploitable vulnerabilities that were practically screaming for attention.
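The two failure modes map onto the standard precision and recall metrics, and in pentesting, recall is the one that hurts. The article gives no counts, so this sketch only shows the calculation.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Precision: share of reported findings that are real.
    Recall: share of real vulnerabilities that were reported."""
    reported = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / reported if reported else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall
```

A tool can look respectable on precision and still have a recall of 10%, which means it silently misses nine out of ten real issues.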

3. Execution Overhead Kills Performance

Every decision required LLM calls. Every tool execution needed parsing. Every result needed evaluation. The overhead wasn't just computational; it was architectural. Death by a thousand API calls.
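The multiplication is easy to underestimate. This back-of-the-envelope sketch assumes one LLM call each for planning, command generation, and parsing per iteration; the real pipeline may have made more. It also assumes five targets, which is a guess for "DVWA, WebGoat, and a few custom VMs".

```python
def llm_calls(targets: int, iterations: int, calls_per_iteration: int) -> int:
    """Total LLM API calls for a campaign: every stage of every iteration costs a call."""
    return targets * iterations * calls_per_iteration


# Assumed: 5 targets, the 100-iteration cap, 3 calls per iteration.
print(llm_calls(targets=5, iterations=100, calls_per_iteration=3))  # 1500
```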

4. AI Doesn't "Want" the Shell

This was the most philosophical realization: The AI executes logic, but it doesn't pursue outcomes. It doesn't have that hacker obsession with getting deeper access. It doesn't care about owning the system the way a human does.

5. Too Much Freedom Kills Creativity

Paradoxically, when given complete autonomy, the AI became scattered and hesitant. Its best results came from tight constraints and forced decision points. Without boundaries, it drifted aimlessly.

Real hackers thrive under pressure and constraints. AI apparently does too.

The Uncomfortable Truth About Human vs. AI Hacking

This experiment revealed something profound about the nature of offensive security work:

Hacking isn't just about technical knowledge. It's intuition, persistence, creativity under pressure, and an almost irrational drive to find a way in. It's the ability to spot patterns in chaos, to trust gut feelings, and to keep digging when logic says to quit.

My AI had access to every tool, every technique, and effectively infinite patience. But it lacked the one thing that makes hackers dangerous: hunger.

What Actually Works: Hybrid Approaches

As they say, "All great things happen between method and madness," and that may be exactly where AI-assisted security belongs. The future of cybersecurity lies not in pure automation (method) or unchecked AI autonomy (madness), but in a thoughtful combination of both.

AI as a Force Multiplier, Not a Replacement:

- Payload generation: Excellent at crafting contextual exploits

- Documentation: Superb at organizing findings and generating reports

- Pattern recognition: Great at spotting potential attack vectors

- Tool orchestration: Solid at chaining complex tool sequences

Humans for the Critical Thinking:

- Target prioritization: What's actually worth attacking?

- Result validation: Is this a real finding or a false positive?

- Creative problem-solving: How do we pivot when stuck?

- Strategic decision-making: When do we escalate or change tactics?
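The sketch below shows one way to split the work: the AI side produces candidate findings, a human approves or rejects each one, and only approved findings reach the report. It assumes the reviewer is modelled as a callback (in practice, an interactive prompt or a ticket queue). `Finding`, `review_queue`, and `to_report` are hypothetical names, and the Markdown table stands in for the report generation the AI handles well.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    title: str
    evidence: str


def review_queue(findings: list[Finding], approve: Callable[[Finding], bool]) -> list[Finding]:
    """AI proposes, human disposes: keep only findings the reviewer approves."""
    return [f for f in findings if approve(f)]


def to_report(findings: list[Finding]) -> str:
    """Render approved findings as a Markdown table (the documentation step)."""
    lines = ["| Finding | Evidence |", "|---|---|"]
    lines += [f"| {f.title} | {f.evidence} |" for f in findings]
    return "\n".join(lines)
```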

Final Thoughts: Finding the Balance

This was one of the most fascinating projects I've worked on, combining offensive security, AI orchestration, and systems design into something that was simultaneously impressive and humbling.

It proved we can simulate offensive reasoning. But simulation isn't a replacement for human talent.

The processes humans have written (in blood, sweat, and shell scripts) still outperform even sophisticated AI systems. Not because LLMs lack knowledge, but because they lack will.

And maybe that's not a bug. Maybe that's a feature.

This project reminded me why I love both hacking and AI research. They're both about pushing boundaries, even when those boundaries push back.

Full code and detailed implementation coming soon.

Want to discuss AI in offensive security? Drop me a line. I'm always eager to exchange notes with fellow researchers exploring this fascinating intersection.

If this resonated with you, give it a like and follow for more stories about building ambitious things that (sometimes) fail in interesting ways.